I was recently reading through my backlog of Dr. Dobb’s Journal issues (a PDF I receive monthly) and came across an article entitled The Requirements Payoff by Karl Wiegers. I’ve archived a copy of the PDF here; the article is on pages 5-6.

A lot of people seem to equate “requirements gathering” with “big design up front” (BDUF), which is now often vilified as antiquated and bloated. Nothing could be further from the truth (about requirements, not BDUF). In the book Agile Principles, Patterns, and Practices in C#, Uncle Bob talks about practicing Agile in a .NET world. In every one of his examples, you have to know what your requirements are ahead of time. The process goes as follows:

- Gather requirements

- Estimate requirements to determine length of project

- Work requirements in iterations

- Gauge velocity in coding requirements against estimate

- Determine whether your velocity requires you to either cut requirements or extend timelines

- Lather, rinse, repeat
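To make the velocity step concrete, here is a minimal sketch of the arithmetic, not anything taken from the book. The point totals, the iteration count, and the function names `iterations_needed` and `points_to_cut` are all made up for illustration.

```python
import math


def iterations_needed(total_points, velocity):
    """Iterations required to finish total_points at the given velocity."""
    if velocity <= 0:
        raise ValueError("velocity must be positive")
    return math.ceil(total_points / velocity)


def points_to_cut(total_points, velocity, iterations_available):
    """Points that will not fit in the iterations that are left."""
    capacity = velocity * iterations_available
    return max(0, total_points - capacity)


# Hypothetical project: 120 estimated points over 6 planned iterations,
# so the plan assumes 20 points per iteration.
total = 120
planned_iterations = 6
completed = [14, 16, 15]  # points actually finished in the first 3 iterations

velocity = sum(completed) / len(completed)   # measured velocity: 15.0
remaining = total - sum(completed)           # 75 points still to do
left = planned_iterations - len(completed)   # 3 iterations left in the plan

# Option 1: extend the timeline. How many iterations does the rest need?
print(iterations_needed(remaining, velocity))    # 5

# Option 2: keep the timeline. How many points have to be cut?
print(points_to_cut(remaining, velocity, left))  # 30.0
```

Either answer only means something if the 120 points came from real requirements in the first place.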

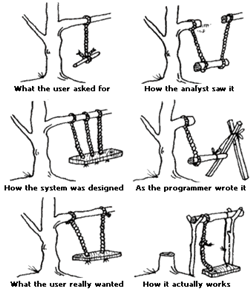

You can see that the agile process doesn’t work without requirements. In the article, Wiegers says that according to a 1997 study, “Thirty percent or more of the total effort spent on most projects goes into rework, and requirement errors can consume 70% to 85% of all project rework costs”. That is a big penalty to pay for laziness or ineptitude in early requirements gathering. This doesn’t mean that the requirements collected at the beginning are rigid and inflexible; they are just a starting point. However, changing the requirements also changes the estimate and the expected cost in time and resources, and that kind of rework is exactly what the statistic quoted above is counting.
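Multiplying the two figures from that quote gives a rough sense of scale. This is my own back-of-the-envelope arithmetic, not a number from the article, and it treats “thirty percent or more” as exactly 30, so read the result as a floor. The function name is made up.

```python
def requirements_rework_share(rework_pct, requirements_pct):
    """Percent of total project effort lost to requirements-related rework.

    rework_pct: percent of total effort that goes into rework
    requirements_pct: percent of that rework caused by requirement errors
    """
    return rework_pct * requirements_pct / 100


low = requirements_rework_share(30, 70)
high = requirements_rework_share(30, 85)

print(f"{low}% to {high}% of total project effort")
# 21.0% to 25.5% of total project effort
```

In other words, by those figures, something like a fifth to a quarter of the whole project can go to fixing requirement errors.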

Requirements are essential to TDD and BDD advocates as well. The requirements are what the vast majority of the tests are written against. For instance, if the requirement is that the user name is an email address, then a programmer will likely write tests (both positive and negative) verifying that the program behaves as expected when presented with an email address or a non-email address. Without that requirement, a test might only be written to ensure the user name wasn’t blank, and the stakeholders might be upset when the program behaves differently from what they had imagined in their heads (and which you failed to ferret out during requirements gathering).
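Here is a rough sketch of what those tests might look like, written as plain pytest-style functions. The `is_valid_user_name` function and its deliberately simple pattern are assumptions for illustration only; real email validation is more involved.

```python
import re

# Deliberately simple, hypothetical pattern: something@something.something
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_valid_user_name(user_name):
    """Requirement: the user name must be an email address."""
    return EMAIL_PATTERN.fullmatch(user_name) is not None


def test_accepts_email_address():
    # Positive test, written directly against the requirement.
    assert is_valid_user_name("pat@example.com")


def test_rejects_non_email_address():
    # Negative test: "pat" is not blank, so a blank-only check would
    # let it through. Only the email requirement tells us to reject it.
    assert not is_valid_user_name("pat")


def test_rejects_blank():
    # The one test that might get written without the requirement.
    assert not is_valid_user_name("")
```

The middle test is the one that only exists because somebody wrote the requirement down.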

It is true that gathering proper requirements in advance costs time. Wiegers sums it up best, however, when he says, “These practices aren’t free, but they’re cheaper than waiting until the end of a project or iteration, and then fixing all the problems. The case for solid requirements practices is an economic one. With requirements, it’s not ‘You can pay me now, or you can pay me later.’ Instead, it’s ‘You can pay me now, or you can pay me a whole lot more later.’”

I couldn’t agree more. Keep requirements gathering to a single iteration, and expect the requirements to change many times throughout the iterations.

I personally like the term ‘acceptance criteria’ a little more because it is suggestive of its initial purpose (the things that will make this ‘done’). Nothing like having a list of things to the effect of:

The feature must do –

* Item A

* Item B

* Item C

only to find out (once you have provided items A-C) that:

* Item A actually needs to change to act this way

* Item B should include a few extra things

* Item C should function entirely differently

* Oh, and we added Items D-G

The second bullet list would be a new iteration (and technically a new feature) and would allow you to see which process (the initial implementation or the follow up work) is costing you all of your added time.

At least with this you can track WHY it took you 2 weeks to complete a task that was only supposed to take 2 days. From there you can easily compare the value added by such features against their actual cost and decide whether something in the process needs to change: better initial requirements gathering, for example, or better use of the coder’s time (maybe he/she is being pulled into too many meetings or other productivity drains).
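As a sketch of that kind of tracking, assume each iteration records its acceptance criteria along with estimated and actual days. The `Iteration` class, the helper name, and every number below are invented for illustration; they simply split a 2-day estimate that became 10 working days across the two bullet lists above.

```python
from dataclasses import dataclass


@dataclass
class Iteration:
    name: str
    criteria: list
    estimated_days: int
    actual_days: int


def overrun_by_iteration(iterations):
    """Days over estimate for each iteration, keyed by iteration name."""
    return {it.name: it.actual_days - it.estimated_days for it in iterations}


# Hypothetical numbers: estimated at 2 days, delivered in 10 working days.
feature = [
    Iteration("initial implementation",
              ["Item A", "Item B", "Item C"],
              estimated_days=2, actual_days=3),
    Iteration("follow-up changes",
              ["Item A changed", "Item B extras", "Item C reworked", "Items D-G"],
              estimated_days=0, actual_days=7),
]

total_actual = sum(it.actual_days for it in feature)
print(total_actual)                   # 10
print(overrun_by_iteration(feature))
# {'initial implementation': 1, 'follow-up changes': 7}
```

With made-up numbers like these, the initial implementation slipped by a day, while the changed and added criteria account for most of the overrun.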

The problem I have witnessed all too often is that requirements are left ‘undefined’, so when they finally become defined (usually halfway into the dev process) they add unmeasurable scope to a feature.