For this next post in my series on the new features in C# 6, I’m going to cover the using static syntax. This feature is also 100% syntactic sugar, but it can be helpful. Unfortunately, it also comes with quite a few gotchas.

I’m sure all of us are familiar with writing code like you see below. The WriteLine() method is a static method on the static class Console.

```csharp
using System;

namespace CodeSandbox
{
    class Program
    {
        static void Main(string[] args)
        {
            Console.WriteLine("Hello World");
        }
    }
}
```

Because Console is a static class, we can treat it as a “given” and simply include it in our using statements. Console.WriteLine() is really System.Console.WriteLine() once you include the namespace. However, since we have “using System;” at the top of the file, we don’t have to type the namespace out. Using static applies the exact same concept, just to the class instead of the namespace.

```csharp
using static System.Console;

namespace CodeSandbox
{
    class Program
    {
        static void Main(string[] args)
        {
            WriteLine("Hello World");
        }
    }
}
```

Now, instead of just “using”, I’ve written “using static” followed by the full name of the static class, in this case System.Console. Once that is done, I’m free to call the WriteLine() method as if it were locally scoped inside my class. This is all just syntactic sugar. When I use Telerik’s JustDecompile to analyze the program and give me back the source, this is what I get.

```csharp
using System;

namespace CodeSandbox
{
    internal class Program
    {
        public Program()
        {
        }

        private static void Main(string[] args)
        {
            Console.WriteLine("Hello World");
        }
    }
}
```

If we dig even further into the IL, we can see that it agrees: we are still just making a call to WriteLine() on the System.Console class.

```
.method private hidebysig static void Main (
    string[] args
) cil managed
{
    .entrypoint

    IL_0000: nop
    IL_0001: ldstr "Hello World"
    IL_0006: call void [mscorlib]System.Console::WriteLine(string)
    IL_000b: nop
    IL_000c: ret
}
```
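Because the compiler simply rewrites the shortened calls back to their fully qualified form, using static isn't limited to Console. It works with any type's static members, and it brings static fields and constants into scope as well as methods. Here is a minimal sketch using System.Math. The Geometry class and its CircleArea and Hypotenuse methods are hypothetical names made up for this illustration:

```csharp
using System;
using static System.Math;

namespace CodeSandbox
{
    // Hypothetical helper class, used only to illustrate "using static".
    public static class Geometry
    {
        // PI (a constant) and Pow are members of System.Math,
        // but no "Math." prefix is needed here.
        public static double CircleArea(double radius) => PI * Pow(radius, 2);

        // Sqrt also comes from System.Math.
        public static double Hypotenuse(double a, double b) => Sqrt(a * a + b * b);
    }

    class Program
    {
        static void Main(string[] args)
        {
            Console.WriteLine(Geometry.Hypotenuse(3, 4)); // prints 5
        }
    }
}
```

Decompiling this would show the same pattern as above: PI, Pow and Sqrt come back as Math.PI, Math.Pow and Math.Sqrt.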

Okay, that makes sense. Seems potentially helpful. So where do these promised “gotchas” come in? Our issues start to arise when you have multiple “using static” declarations in a file. If those static classes contain methods with the same name, and the compiler can’t tell which one you mean, the call becomes ambiguous. Let’s take a look at one place where this can absolutely happen in a commonly used area of .NET.

```csharp
using System.IO;

namespace CodeSandbox
{
    class Program
    {
        static string fakeSourceLocation = @"c:\";
        static string fakeBackupLocation = @"c:\backup\";

        static void Main(string[] args)
        {
            foreach (var fileWithPath in Directory.GetFiles(fakeSourceLocation))
            {
                var fileName = Path.GetFileName(fileWithPath);
                var backupFileWithPath = Path.Combine(fakeBackupLocation, fileName);

                if (!File.Exists(backupFileWithPath))
                {
                    File.Copy(fileWithPath, backupFileWithPath);
                }
            }
        }
    }
}
```

In this simple, contrived example, I’m iterating through all of the files in a directory and, if they don’t exist in a backup location, copying them there. This doesn’t deal with versioning, etc., but it does give us some basic System.IO examples to work with. Now, if I try to simplify the code like this, I run into an issue.

```csharp
using static System.IO.Directory;
using static System.IO.File;
using static System.IO.Path;

namespace CodeSandbox
{
    class Program
    {
        static string fakeSourceLocation = @"c:\";
        static string fakeBackupLocation = @"c:\backup\";

        static void Main(string[] args)
        {
            foreach (var fileWithPath in GetFiles(fakeSourceLocation))
            {
                var fileName = GetFileName(fileWithPath);
                var backupFileWithPath = Combine(fakeBackupLocation, fileName);

                // Does not compile: ambiguous between Directory.Exists and File.Exists
                if (!Exists(backupFileWithPath))
                {
                    Copy(fileWithPath, backupFileWithPath);
                }
            }
        }
    }
}
```

This won’t even build. Can you guess why? If you are very familiar with the System.IO classes, you might have noticed that there is both a System.IO.File.Exists() and a System.IO.Directory.Exists(). The build error tells us as much: “The call is ambiguous between the following methods or properties: ‘Directory.Exists(string)’ and ‘File.Exists(string)’”. One way to get around this is to be explicit at the ambiguous call, like this:

```csharp
using static System.IO.Directory;
using static System.IO.File;
using static System.IO.Path;

namespace CodeSandbox
{
    class Program
    {
        static string fakeSourceLocation = @"c:\";
        static string fakeBackupLocation = @"c:\backup\";

        static void Main(string[] args)
        {
            foreach (var fileWithPath in GetFiles(fakeSourceLocation))
            {
                var fileName = GetFileName(fileWithPath);
                var backupFileWithPath = Combine(fakeBackupLocation, fileName);

                if (!System.IO.File.Exists(backupFileWithPath))
                {
                    Copy(fileWithPath, backupFileWithPath);
                }
            }
        }
    }
}
```
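If you would rather not spell out the whole namespace, you can keep a regular using System.IO; directive alongside the using static lines. The class name alone then resolves the ambiguity. Below is a minimal sketch under that assumption. I’ve also moved the loop into a hypothetical Backup.CopyMissingFiles method (not part of the original example), so the source and backup folders are parameters instead of hard-coded paths:

```csharp
using System;
using System.IO;
using static System.IO.Directory;
using static System.IO.File;
using static System.IO.Path;

namespace CodeSandbox
{
    // Hypothetical wrapper around the backup loop from the example above.
    public static class Backup
    {
        // Copies every file in sourceDir that is missing from backupDir
        // and returns how many files were copied.
        public static int CopyMissingFiles(string sourceDir, string backupDir)
        {
            var copied = 0;
            foreach (var fileWithPath in GetFiles(sourceDir))            // Directory.GetFiles
            {
                var fileName = GetFileName(fileWithPath);                 // Path.GetFileName
                var backupFileWithPath = Combine(backupDir, fileName);    // Path.Combine

                // A bare "Exists" would be ambiguous (File vs. Directory),
                // but with "using System.IO;" the short class name is enough.
                if (!File.Exists(backupFileWithPath))
                {
                    Copy(fileWithPath, backupFileWithPath);               // File.Copy
                    copied++;
                }
            }
            return copied;
        }
    }

    class Program
    {
        static void Main(string[] args)
        {
            // Hypothetical usage: pass the source and backup folders on the command line.
            if (args.Length == 2)
            {
                var count = Backup.CopyMissingFiles(args[0], args[1]);
                Console.WriteLine($"Copied {count} file(s).");
            }
        }
    }
}
```

Calling Backup.CopyMissingFiles(@"c:\", @"c:\backup\") should behave like the original loop. Whether File.Exists reads better than System.IO.File.Exists, or just mixes two styles in one file, is the same judgment call.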

I don’t know how that makes me feel. It seems like at least a small code smell. Maybe on these occasions you just don’t use this shortcut if it feels wrong. In general, I could see this being like the Great Var Debate of 2008™. People thought that var foo = new Bar(); was smelly compared to Bar foo = new Bar();, but it seems like most people have moved past it. Maybe I’m just at the beginning of this wave of change and I’ll stop yelling for the kids to get off my lawn soon. At the moment, however, I’m probably going to file using static in the “not something I’m going to really use” pile.

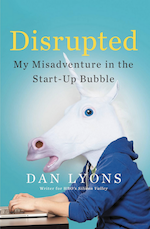

I recently finished reading a book by Dan Lyons called Disrupted: My Misadventure in the Start-Up Bubble on the recommendation of my friend Dustin Rogers. This book is an inside look at a company called HubSpot that – according to Lyons – exhibits some of the worst and most stereotypical traits of Silicon Valley companies. In this episode, I review the book and talk about some of the things that drive me nuts about these kinds of companies.

In today’s episode, I interview Erik Dietrich from Daedtech.com. Erik wrote a blog series about something he called “The Expert Beginner”. When I read it, I felt kind of convicted because I was worried that I was wreaking havoc all over the city with “Expert Beginnerdom”. Listen in to find out what an Expert Beginner is and whether you are one or know one!

This past week, someone’s comment that they “don’t blog, they solve problems instead” really struck a nerve with me. That’s not only because I’m a blogger, but because I know just how many times a day my problems are solved because someone blogged the solution at some point in the past.