This post is the second of three posts that I have planned in a little mini-series. Last time, we looked at What are Cryptographic Hashes? and this time, we’re going to talk about how to identify cryptographic hashes that you might find in the wild.


In offensive security and during security audits, you’ll often encounter cryptographic hashes. These may be part of password dumps, logs, malware signatures, or other files. However, not all hashes are labeled clearly, and determining the type of hash used is essential for further analysis, especially when cracking or reversing it. Let’s dive into how you can identify cryptographic hashes in the wild and the tools that make the process easier.

This is important for a few reasons, but from an Offensive Security perspective, the biggest one is that you often need to know the type of hash you’re dealing with to be able to crack it correctly. If you’re just using a huge, pre-computed rainbow table like I assume CrackStation uses, identifying the hash is less important because the service just looks up the digest and returns the stored plain text value that was originally used to make it. With other tools, where you run specific wordlists and “hash on demand”, you have to know which algorithm you’re trying before you start. So how do we do it?
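To make the “hash on demand” point concrete, here is a minimal sketch of what a wordlist attack does under the hood. The three-word wordlist and the `crack` helper are made up for illustration; real tools are far faster and smarter, but the dependency on picking the right algorithm is the same.

```python
import hashlib

# Hypothetical three-word wordlist, for illustration only.
WORDLIST = ["password", "hello", "letmein"]


def crack(target_hex, algorithm, wordlist):
    """Hash each candidate with the given algorithm and compare to the target."""
    target_hex = target_hex.lower()
    for word in wordlist:
        digest = hashlib.new(algorithm, word.encode("utf-8")).hexdigest()
        if digest == target_hex:
            return word
    return None


target = "5d41402abc4b2a76b9719d911017c592"
print(crack(target, "md5", WORDLIST))   # right algorithm: finds "hello"
print(crack(target, "sha1", WORDLIST))  # wrong algorithm: None
```

The same wordlist succeeds or fails depending only on the algorithm you chose, which is why identification comes first.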

Over time, you may develop some kind of sixth sense about it and be able to look at them and be pretty sure what you’re seeing. But, if you’re new or want to know the process that is going on behind the scenes, here’s a decision process. First, look at the length of the hash. Here are some lengths of some very popular hash types you’re likely to encounter. Length alone doesn’t always give you a definitive answer, but it helps rule out many possibilities.

- MD5: 32 hexadecimal characters (128 bits).

- SHA-1: 40 hexadecimal characters (160 bits).

- SHA-256: 64 hexadecimal characters (256 bits).

- SHA-512: 128 hexadecimal characters (512 bits).

- bcrypt: 60 characters in its usual encoded form (not hexadecimal; the string also carries the version, cost, and salt).
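As a rough sketch, the length check can be written as a simple lookup. The `candidates_by_length` name and the table are my own and cover only the five types listed here; many other algorithms share these lengths, so treat the result as a starting point rather than an answer.

```python
import string

# Hex digest lengths for the types listed above (not an exhaustive table).
HEX_LENGTHS = {32: ["MD5"], 40: ["SHA-1"], 64: ["SHA-256"], 128: ["SHA-512"]}


def candidates_by_length(value):
    """Return candidate hash types based only on length and character set."""
    value = value.strip()
    if value and all(c in string.hexdigits for c in value):
        return HEX_LENGTHS.get(len(value), [])
    # bcrypt strings are 60 characters but are not plain hexadecimal.
    if len(value) == 60:
        return ["bcrypt"]
    return []


print(candidates_by_length("5d41402abc4b2a76b9719d911017c592"))
```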

Next thing you want to do is look for special formatting of the hash itself that might be unique to the hash’s “signature”.

- bcrypt hashes often start with $2a$, $2b$, or $2y$.

- MD5 is typically a straightforward 32-character hexadecimal string.

- NTLM (used in Windows environments) often has a 32-character hexadecimal format similar to MD5 but is distinct in purpose.
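The format checks above translate naturally into regular expressions. This is a minimal sketch with patterns I wrote for these three cases only; note that MD5 and NTLM cannot be told apart by format, so both come back as candidates.

```python
import re

# "$2a$", "$2b$", or "$2y$", a two-digit cost, then 53 characters of
# salt plus digest in bcrypt's base64-style alphabet.
BCRYPT_RE = re.compile(r"^\$2[aby]\$\d{2}\$[./A-Za-z0-9]{53}$")
HEX32_RE = re.compile(r"^[0-9a-fA-F]{32}$")


def identify_by_format(value):
    """Return candidate types based on the hash's visible signature."""
    value = value.strip()
    if BCRYPT_RE.match(value):
        return ["bcrypt"]
    if HEX32_RE.match(value):
        # Same shape on the page; only context can separate these two.
        return ["MD5", "NTLM"]
    return []


print(identify_by_format("5d41402abc4b2a76b9719d911017c592"))
```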

The next thing you want to do (honestly, maybe this should kind of be step 0 even) is consider the context of where the hash came from. It isn’t super likely that you just “found a hash on the ground”, you usually have some context with it and that context can give us some hints.

- Password Databases: If you’re analyzing a password database, it’s common to find hashes like bcrypt, PBKDF2, or even older ones like MD5.

- File Integrity Checks: If the hash is related to file integrity checks (e.g., software downloads), SHA-256 or SHA-512 is often used.

- Certificates and Signatures: Digital certificates or signatures may use SHA-256 or SHA-1, although SHA-1 has been largely deprecated.

Another thing to do is to look for distinctive markers at specific points in the hash. Some hash functions, particularly those used for passwords (like bcrypt, PBKDF2, and Argon2), employ salting and iterations to increase security. The salt is a random value added to the input to ensure the same passwords don’t produce identical hashes. Hashes with salts are often longer and may include markers or delimiters in the format, like the way you might find a bcrypt hash that starts with ‘$2a$10$…’ where ’10’ is the cost factor.
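Here is a small sketch of the salting idea using PBKDF2 from Python’s standard library. The `hash_password` helper, the 16-byte salt, and the iteration count are assumptions chosen for the demo, not recommendations.

```python
import hashlib
import os


def hash_password(password, salt=None, iterations=100_000):
    """Derive a PBKDF2-HMAC-SHA256 digest, generating a random salt if needed."""
    if salt is None:
        salt = os.urandom(16)  # assumed salt size for this demo
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations
    )
    return salt, digest


salt_a, digest_a = hash_password("hunter2")
salt_b, digest_b = hash_password("hunter2")
print(digest_a == digest_b)  # False: same password, different salts

_, digest_again = hash_password("hunter2", salt=salt_a)
print(digest_again == digest_a)  # True: same password and same salt
```

This is also why the salt has to be stored alongside the digest, and why salted formats carry those extra fields and delimiters.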

So suppose you found a hash like $2y$12$EXRkfkdmXn2gzds2SSituJWMqp3hPFO4lH/vqFhD8aJL.lfwBby4a during a penetration test. The $2y$ prefix indicates that the algorithm is bcrypt, and 12 is the cost factor, which is the base-2 logarithm of the number of iterations (so 2^12 = 4,096 rounds here). You can then use a tool like John the Ripper or Hashcat with your favorite wordlists, specifying bcrypt as the algorithm.
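If you want to pull a bcrypt string apart yourself, a minimal sketch looks like this. The `parse_bcrypt` helper is my own; it relies on the standard bcrypt layout, where the last field is a 22-character salt followed by a 31-character digest, and on the cost being a power-of-two exponent.

```python
def parse_bcrypt(value):
    """Split a bcrypt string into its variant, cost, salt, and digest."""
    parts = value.strip().split("$")
    # Expected shape: ['', '2y', '12', '<22-char salt + 31-char digest>']
    if len(parts) != 4 or parts[0] != "" or len(parts[3]) != 53:
        raise ValueError("not a bcrypt-style hash")
    cost = int(parts[2])
    return {
        "variant": parts[1],
        "cost": cost,
        "rounds": 2 ** cost,  # the cost factor is an exponent
        "salt": parts[3][:22],
        "digest": parts[3][22:],
    }


info = parse_bcrypt("$2y$12$EXRkfkdmXn2gzds2SSituJWMqp3hPFO4lH/vqFhD8aJL.lfwBby4a")
print(info["variant"], info["cost"], info["rounds"])  # 2y 12 4096
```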

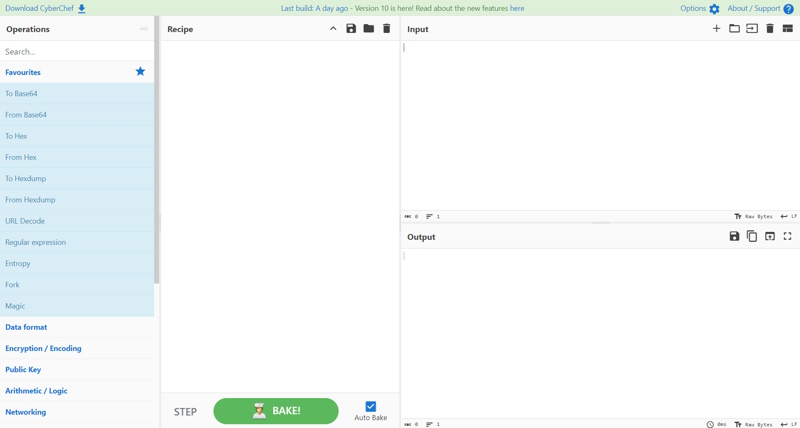

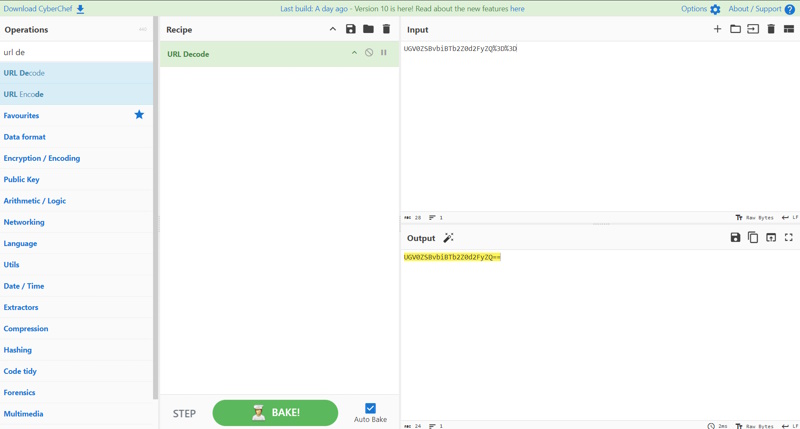

The next step (maybe it might be your first step) is to try using some tools to help you. The truth is that these tools apply some of the heuristics that I’ve already outlined (and many more that I haven’t) in order to figure out what you’re dealing with. One of the most popular is called hashID (which replaced hash-identifier), a Python tool that you can install with pip and then call from the command line. Here is an example of me using it to identify some hashes.

    $ hashid '5d41402abc4b2a76b9719d911017c592'
    Analyzing '5d41402abc4b2a76b9719d911017c592'
    [+] MD2
    [+] MD5
    [+] MD4
    [+] Double MD5
    [+] LM
    [+] RIPEMD-128
    [+] Haval-128
    [+] Tiger-128
    [+] Skein-256(128)
    [+] Skein-512(128)
    [+] Lotus Notes/Domino 5
    [+] Skype
    [+] Snefru-128
    [+] NTLM
    [+] Domain Cached Credentials
    [+] Domain Cached Credentials 2
    [+] DNSSEC(NSEC3)
    [+] RAdmin v2.x
    $ hashid '$2y$12$EXRkfkdmXn2gzds2SSituJWMqp3hPFO4lH/vqFhD8aJL.lfwBby4a'
    Analyzing '$2y$12$EXRkfkdmXn2gzds2SSituJWMqp3hPFO4lH/vqFhD8aJL.lfwBby4a'
    [+] Blowfish(OpenBSD)
    [+] Woltlab Burning Board 4.x
    [+] bcrypt

You’ll notice that sometimes, you get a LOT of possible results. That’s why I personally put the tools a little lower on the list. You will want to use context to try to figure out which of those suggestions is most likely. From there, you may have to make a few attempts to crack the hash, but you should have some kind of logic to the order. Consider the context, the technology stack, when it was written, what you know about the development team, etc. You may see some people suggest tools like OnlineHashCrack (use the menu to go to Free Tools, then Hash Identification). The direct link is here, but that can change. Unless you can’t install hashID on your system for some reason, I’d just use hashID. OnlineHashCrack seems to be using something like hash-identifier under the hood, as the results are very similar.

Hash Identifier

    $ hash-identifier 5d41402abc4b2a76b9719d911017c592
    [banner: hash-identifier v1.2, By Zion3R, www.Blackploit.com, Root@Blackploit.com]
    --------------------------------------------------
    Possible Hashs:
    [+] MD5
    [+] Domain Cached Credentials - MD4(MD4(($pass)).(strtolower($username)))
    Least Possible Hashs:
    [+] RAdmin v2.x
    [+] NTLM
    [+] MD4
    [+] MD2
    [+] MD5(HMAC)
    [+] MD4(HMAC)
    [+] MD2(HMAC)
    [+] MD5(HMAC(WordPress))
    [+] Haval-128
    [+] Haval-128(HMAC)
    [+] RipeMD-128
    [+] RipeMD-128(HMAC)
    [+] SNEFRU-128
    [+] SNEFRU-128(HMAC)
    [+] Tiger-128
    [+] Tiger-128(HMAC)
    [+] md5($pass.$salt)
    [+] md5($salt.$pass)
    [+] md5($salt.$pass.$salt)
    [+] md5($salt.$pass.$username)
    [+] md5($salt.md5($pass))
    [+] md5($salt.md5($pass))
    [+] md5($salt.md5($pass.$salt))
    [+] md5($salt.md5($pass.$salt))
    [+] md5($salt.md5($salt.$pass))
    [+] md5($salt.md5(md5($pass).$salt))
    [+] md5($username.0.$pass)
    [+] md5($username.LF.$pass)
    [+] md5($username.md5($pass).$salt)
    [+] md5(md5($pass))
    [+] md5(md5($pass).$salt)
    [+] md5(md5($pass).md5($salt))
    [+] md5(md5($salt).$pass)
    [+] md5(md5($salt).md5($pass))
    [+] md5(md5($username.$pass).$salt)
    [+] md5(md5(md5($pass)))
    [+] md5(md5(md5(md5($pass))))
    [+] md5(md5(md5(md5(md5($pass)))))
    [+] md5(sha1($pass))
    [+] md5(sha1(md5($pass)))
    [+] md5(sha1(md5(sha1($pass))))
    [+] md5(strtoupper(md5($pass)))
    --------------------------------------------------

OnlineHashCrack

Input: 5d41402abc4b2a76b9719d911017c592

Results: Your hash may be one of the following:

- MD2
- MD5
- MD4
- Double MD5
- LM
- RIPEMD-128
- Haval-128
- Tiger-128
- Skein-256(128)
- Skein-512(128)
- Lotus Notes/Domino 5
- Skype
- ZipMonster
- PrestaShop
- md5(md5(md5($pass)))
- md5(strtoupper(md5($pass)))
- md5(sha1($pass))
- md5($pass.$salt)
- md5($salt.$pass)
- md5(unicode($pass).$salt)
- md5($salt.unicode($pass))
- HMAC-MD5 (key = $pass)
- HMAC-MD5 (key = $salt)
- md5(md5($salt).$pass)
- md5($salt.md5($pass))
- md5($pass.md5($salt))
- md5($salt.$pass.$salt)
- md5(md5($pass).md5($salt))
- md5($salt.md5($salt.$pass))
- md5($salt.md5($pass.$salt))
- md5($username.0.$pass)
- Snefru-128
- NTLM
- Domain Cached Credentials
- Domain Cached Credentials 2
- DNSSEC(NSEC3)
- RAdmin v2.x
- Cisco Type 7
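Since all of these tools return long candidate lists, one way to apply the “use context to order your attempts” advice is to parse the `[+]` lines and sort them against a priority list. This is only a sketch: the `CONTEXT_PRIORITY` table is hypothetical and reflects nothing built into hashID, and the sample text is a shortened copy of the output format shown earlier.

```python
import re

# Hypothetical mapping from where the hash was found to what to try first.
CONTEXT_PRIORITY = {
    "windows": ["NTLM", "LM", "Domain Cached Credentials"],
    "web_app": ["MD5", "Double MD5"],
}


def parse_candidates(tool_output):
    """Extract candidate names from lines shaped like '[+] MD5'."""
    return [m.strip() for m in re.findall(r"\[\+\]\s*([^\[\n]+)", tool_output)]


def rank(candidates, context):
    """Move context-preferred candidates to the front, keeping the rest in order."""
    preferred = CONTEXT_PRIORITY.get(context, [])

    def key(name):
        return preferred.index(name) if name in preferred else len(preferred)

    return sorted(candidates, key=key)  # sorted() is stable


sample = """Analyzing '5d41402abc4b2a76b9719d911017c592'
[+] MD2
[+] MD5
[+] MD4
[+] LM
[+] NTLM
"""
print(rank(parse_candidates(sample), "windows"))
```

With the "windows" context, NTLM and LM move to the front of the list and everything else keeps the order the tool reported.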

Some of the tools that you use to crack hashes (like John the Ripper) have built-in format detection, but it only runs as part of a cracking session and doesn’t work as a standalone identifier.

Identifying cryptographic hashes is a critical skill during security audits or penetration tests. With so many different algorithms in use, the ability to quickly pinpoint the hash type allows you to assess security, crack passwords, and verify data integrity. By leveraging online tools, command-line utilities like hashID, and your understanding of hash lengths and formats, you can efficiently identify the most commonly used hashes.

In the next post, we’ll explore how to crack these hashes, using both brute force and other techniques.

This post is the first of three posts that I have planned in a little mini-series. I want to post about What are Cryptographic Hashes?, then Identifying Hashes, and finally Cracking Hashes.

This post is the first of three posts that I have planned in a little mini-series. I want to post about What are Cryptographic Hashes?, then Identifying Hashes, and finally Cracking Hashes. Last month, I started a series about tools and utilities that are good to know with a

Last month, I started a series about tools and utilities that are good to know with a

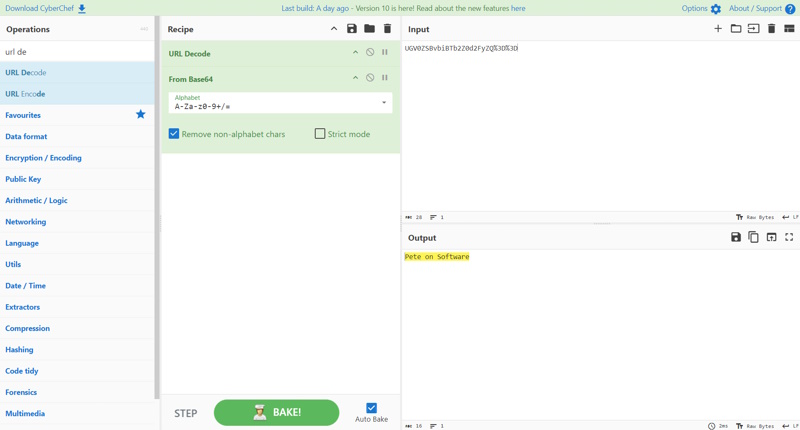

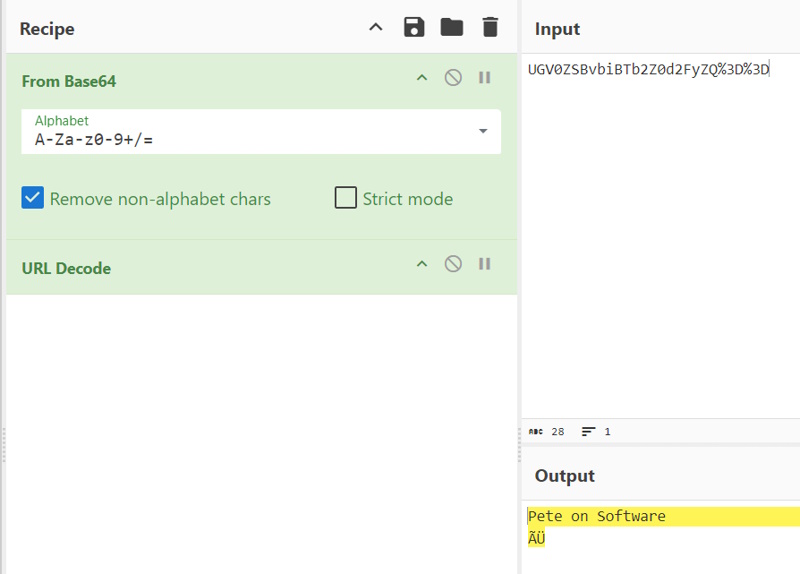

As all bloggers eventually find out, two of the best reasons to write blog posts are either to document something for yourself for later or to force yourself to learn something well enough to explain it to others. That’s the impetus of this series that I plan on doing from time to time. I want to get more familiar with some of these core tools and also have a reference / resource available that is in “Pete Think” so I can quickly find what I need to use these tools if I forget.

As all bloggers eventually find out, two of the best reasons to write blog posts are either to document something for yourself for later or to force yourself to learn something well enough to explain it to others. That’s the impetus of this series that I plan on doing from time to time. I want to get more familiar with some of these core tools and also have a reference / resource available that is in “Pete Think” so I can quickly find what I need to use these tools if I forget.